I have a need, a need for snap

Igor Ljubuncic

on 27 December 2018

Tags: snapcraft.io

Are snaps slow? Or slower than their classic counterparts (DEB or RPM, for instance)? This is a topic that comes up often in online discussions related to various containerised application formats, including snaps. We thought it would be a good idea to run a detailed experiment to see what kind of numbers we get when running software as snaps and classic applications, side by side.

Test setup

Performance analysis of desktop applications is not a trivial thing, because it includes the user perception and interactive responsiveness that are often subjective, and also highly dependent on the user’s hardware and software setup, including the concurrent system activity. For example, a browser experience will be different depending on the network throughput and latency. Heavily I/O bound applications will often perform better on systems with faster storage. CPU-intensive tasks and computational jobs will show favorable results on machines with a higher processor clock frequency.

We also need to add into the mix the graphics drivers as well as the efficiency of the application code. Some programs can make good use of the multi-core architectures of modern systems, while others only utilize a single core. The choice of the desktop environment as well as the kernel version are also likely to affect the results in some way.

Since there are an infinite number of permutations in this equation, the best way to check the relative performance of snap applications against their classic counterparts is to run them on the exact same system, which should eliminate the underlying hardware, desktop environment and other dependencies. While such a test is not comprehensive, it is reasonably indicative.

Our test setup includes:

- Comparative tests of several commonly used applications (repo vs. snap package).

- Profiling and analysis of runtime, CPU and memory usage (average and peak values).

- Several common desktop-use hardware platforms (to account for any non-software variations).

Test 1: Media Player

Video and audio playback is one of the most common desktop activities. To that end, we decided to analyze the behavior of VideoLAN (VLC), a highly popular cross-platform media player.

We had the program launched from the command line, playing a video file and then exiting (with the --play-and-exit flag). The full VLC version was used (the vlc binary and not cvlc). The player was launched 10 times in a for loop, with a 2-second pause after each iteration. We did this four times in total: twice with the standard VLC and twice with the snap VLC, in each case once against a 640×480 30FPS WebM video (vp8 and vorbis codecs) and once against a 1920×1080 30FPS WebM file.

We tested the total runtime of the script spawning the VLC instances as a rough indicator of the overall user experience, as this is what people will notice the most. In parallel, we ran dstat in the background, sampling CPU, disk, network, paging and system (interrupts and context switches) values. We also collected data with dstat with the system in the idle state to ascertain the level of background system resource utilization. We then further refined our analysis with individual runs and a more detailed look at the performance metrics, including the use of perf, a performance analysis tool.
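As a rough illustration, the timing loop described above could be sketched in Python as follows. This is not the script from the gist; the function name run_benchmark, its parameters and the sample file name are assumptions made for this example only.

```python
import subprocess
import time


def run_benchmark(command, iterations=10, pause=2.0,
                  runner=subprocess.run, sleep=time.sleep):
    """Run `command` repeatedly and return the total wall-clock time in seconds.

    `runner` and `sleep` are injectable so the loop can be exercised
    without actually launching a media player.
    """
    start = time.perf_counter()
    for _ in range(iterations):
        # Each launch blocks until the program exits on its own.
        runner(command, check=False)
        # Fixed pause after every iteration, as in the test description.
        sleep(pause)
    return time.perf_counter() - start


# Example usage (hypothetical file name, needs VLC installed):
# total = run_benchmark(["vlc", "--play-and-exit", "sample-640x480.webm"])
# print(f"Total runtime: {total:.1f} s")
```

The total includes the pauses, which is why the later analysis subtracts both the clip length and the sleep time to isolate the startup and shutdown overhead.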

A detailed list of the tools and scripts used here is available as a GitHub gist.

Platform: Kubuntu 18.04 (Ubuntu base + Plasma desktop)

It is important to note that there was a small version difference between the VLC application provided through the distro repositories and the snap package. Indeed, one of the advantages of snaps is that they allow users to obtain newer releases of their software, even if these are not available through the standard package management channels. VLC was at version 3.0.3 (DEB) and version 3.0.4 (snap) at the time of writing.

The full breakdown of the test system and its different components is listed below:

| Component | Version |

| Processor | 8 x Intel(R) Core(TM) i5-8250U @ 1.6 GHz |

| System | Kubuntu 18.04 (64-bit) |

| Kernel | 4.15.0-36-generic |

| Display | X.Org 1.19.6 |

| Screen resolution | 1920×1080 px |

| HD cached read speed | 13.1 GB/s |

| HD buffered read speed | 485 MB/s |

| KDE Plasma version | 5.12.6 |

| KDE Frameworks version | 5.44.0 |

| Qt version | 5.9.5 |

| Snap | 2.35.2 |

| Base | 16 |

Note: Initial startup time

We are aware that snap applications usually take longer to open than their conventional counterparts on first run. The time penalty is caused by the snap desktop integration tool, which initiates and prepares the application environment, including tasks like font configuration and themes. These can take time, and negatively translate into what users experience as a delay in the application opening.

We have invested a lot of time in profiling and optimizing the first run, and several significant changes have been introduced in snap 2.36.2. We are now shipping font configuration binaries in the core snap, which will automatically generate font caches during the installation and eliminate long startup times. This is an important topic, both for us and our users, and we will discuss it separately in another article, including detailed performance figures.

Note: Caching

We also need to take into account the caching of the application data and video file data in the memory. Running an instance of VLC (apart from the initial run discussed above) would require reading the video data from the disk. Subsequent runs would read this data from the memory. This can be verified by analyzing the major page faults count for each timed run.

The data below shows “warm” data runs. In other words, we performed an initial “cold” run, whereby the data is loaded in the memory, and in the ten loop iterations, the data would be read from memory. We will address the caching later on.
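One possible way to check the major page fault count for a single timed run, sketched under the assumption of a Linux system with Python available, is to read the child-process resource usage before and after the launch. The helper name major_faults_for is hypothetical and is not part of the tooling used in this article.

```python
import resource
import subprocess


def major_faults_for(command):
    """Run `command` and return the major page faults charged to it.

    RUSAGE_CHILDREN covers child processes that have terminated and
    been waited for, which subprocess.run() does before returning.
    """
    before = resource.getrusage(resource.RUSAGE_CHILDREN).ru_majflt
    subprocess.run(command, check=False)
    after = resource.getrusage(resource.RUSAGE_CHILDREN).ru_majflt
    return after - before


# Example usage (hypothetical file name):
# print(major_faults_for(["vlc", "--play-and-exit", "sample-640x480.webm"]))
```

A "cold" run would be expected to show a noticeably higher count than a "warm" run of the same command, since the data has to be read from the disk.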

Results

The test results were quite interesting, and contained some rather important pointers. First, let’s take a look at the runtime figures.

| Total runtime (seconds) | VLC (DEB) | VLC (Snap) |

| 640×480 (13 seconds clip) | 155.5 | 157.5 |

| 1920×1080 (49 seconds clip) | 520 | 522.3 |

With the 13-second clip, we have a total of 10 iterations = 130 seconds plus 20 seconds pause (sleep), which translates into 150 seconds total expected runtime. We can see that the program startup and shutdown adds a relatively minimal overhead of about 5-7 seconds, roughly 0.5-0.7 seconds per iteration, or about 250 to 350 ms for each program startup or shutdown. The snap version performed about 2 seconds slower over the duration of the entire sequence, which translates into about 1.2% penalty compared to the DEB package.

With the HD clip, the total expected runtime without any application startup or shutdown time is 510 seconds. We incur a slightly longer penalty of about 10 seconds with the standard program version and about 12 seconds with the snap version. This translates roughly into 1 second extra time per cycle, or roughly about 500 ms for each program startup and shutdown.

Interestingly, the two-second difference remains, which indicates that it is not related to anything inherent in the media player; it is a cumulative penalty caused by snaps. With the 49-second clip, the overall difference translates into less than 0.5% more runtime. We see that snaps consistently have an application initiation time that is about 200 ms slower. Crucially, this is less than the 400 ms Doherty Threshold. Back in 1982, IBM engineers Walter J. Doherty and Ahrvind J. Thadani established that interactive user experience begins to degrade when applications incur a response penalty of about 400 ms or higher. In other words, it is desirable for interactive software to have a response time below this threshold.
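The arithmetic behind these figures can be reproduced with a few lines of Python. The sketch below uses only the numbers from the runtime table; the function names are chosen for this example.

```python
DOHERTY_THRESHOLD_S = 0.4  # 400 ms, as cited above


def expected_runtime(clip_s, iterations=10, pause_s=2.0):
    """Playback plus sleep time, with no startup or shutdown cost."""
    return iterations * (clip_s + pause_s)


def overhead_per_iteration(total_s, clip_s, iterations=10, pause_s=2.0):
    """Startup and shutdown overhead per launch, in seconds."""
    return (total_s - expected_runtime(clip_s, iterations, pause_s)) / iterations


def snap_delta_per_launch(deb_total_s, snap_total_s, iterations=10):
    """Extra time per launch for the snap compared to the DEB package."""
    return (snap_total_s - deb_total_s) / iterations


# (label, clip length [s], DEB total [s], snap total [s]) from the table above
runs = [("640x480", 13, 155.5, 157.5), ("1920x1080", 49, 520.0, 522.3)]

for label, clip_s, deb_total, snap_total in runs:
    delta = snap_delta_per_launch(deb_total, snap_total)
    print(label,
          f"expected={expected_runtime(clip_s):.0f}s",
          f"deb_overhead={overhead_per_iteration(deb_total, clip_s):.2f}s",
          f"snap_overhead={overhead_per_iteration(snap_total, clip_s):.2f}s",
          f"snap_delta={delta:.2f}s",
          f"below_threshold={delta < DOHERTY_THRESHOLD_S}")
```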

Dstat data

With dstat, we come to a range of other results and conclusions. The baseline user and system CPU figures are 0.83% and 0.34% respectively, with interrupts and context switches numbers at 405 and 1385. All values include underlying system baseline figures.

| Version | User CPU [%] | Sys CPU [%] | Interrupts | Context Switches |

| VLC (DEB) (640×480) | 7.95 | 1.44 | 1539 | 4826 |

| VLC (Snap) (640×480) | 4.23 | 1.57 | 1562 | 5091 |

| Version | User CPU [%] | Sys CPU [%] | Interrupts | Context Switches |

| VLC (DEB) (1920×1080) | 8.69 | 1.47 | 1516 | 5142 |

| VLC (Snap) (1920×1080) | 3.88 | 1.50 | 1380 | 4978 |

We can see that the system CPU utilization is slightly higher with the snap version than with the standard application: between 0.03 and 0.13 percentage points in absolute terms. In relative terms, however, the system CPU usage was 9% higher with the snap test run than with the standard run for the shorter video, and about 2% higher for the longer, HD clip. The gap is wider for the shorter clip because the application startup and shutdown phases represent a larger part of its overall runtime. In general, this is true for any interactive software. For example, a 1-second application penalty is less noticeable over a 2-hour task than over one that lasts only minutes or seconds.
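To make the distinction between absolute and relative differences concrete, here is a minimal Python sketch using the system CPU values from the dstat tables above. The function name compare is an assumption for this example.

```python
def compare(deb_percent, snap_percent):
    """Return (absolute difference in percentage points, relative difference in %)."""
    absolute = snap_percent - deb_percent
    relative = absolute / deb_percent * 100.0
    return absolute, relative


# System CPU [%] from the dstat tables: (DEB, snap)
sys_cpu = {"640x480": (1.44, 1.57), "1920x1080": (1.47, 1.50)}

for clip, (deb, snap) in sys_cpu.items():
    absolute, relative = compare(deb, snap)
    print(f"{clip}: +{absolute:.2f} points, +{relative:.1f}% relative")
```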

The user CPU numbers favor the snap package. It used less CPU for both the SD and HD clips, especially the latter (again, because part of the resource utilization is caused by the application initiation and termination, and is averaged over the total runtime).

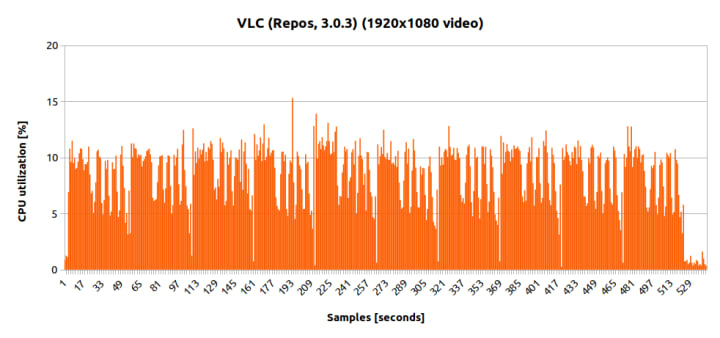

However, since averaging removes part of the overall information, it is also useful to look at the complete set of data points for a typical run. Here we can see that VLC startup uses about 10% CPU for both versions, indicating that the two applications, regardless of their packaging, behave more or less consistently. The major difference is in the CPU utilization during the video playback. We believe that this pattern results from the difference in the VLC version itself, and is not part of the underlying packaging mechanism. We will examine this hypothesis shortly.

Another interesting snippet of information is in the number of hardware interrupts the system performs over a typical run. This is more noticeable with the longer-running clip, where the snap version used about 9% fewer interrupts.

The number of context switches per second is largely identical, which indicates there’s no difference in the overall interactive experience (barring the earlier 200 ms launch time penalty). In general, computational (batch) tasks favor fewer switches and using as much of the allocated processor time as possible, whereas interactive tasks should try to use as little of the allocated timeslice as possible.

Single run comparison

In order to understand the userspace CPU utilization differences better, we need to look more closely at the metrics for a typical single run. As we have seen earlier, the two versions of the media player mostly differ in the CPU utilization. The snap has a slightly lower maximum memory usage, but it did have more minor page faults and context switches. The other performance metrics are largely identical.

| Software version | Runtime [s] | CPU [%] | Max RSS [MB] |

| VLC 3.0.3 (DEB) | 13.5 | 49 | 149 |

| VLC 3.0.4 (Snap) | 13.7 | 16 | 113 |

Please note that the time command reports CPU percentage values normalized to a single core (= 100%). The actual system-wide usage can be derived by dividing by the number of available cores. For example, 50% single-core usage on an eight-core system is actually about 6.25% of the total CPU capacity.
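The conversion is a simple division. As a short Python sketch (the function name is chosen for this example), applied to the two values from the single-run table on the eight-core test system:

```python
def system_wide_cpu(single_core_percent, cores):
    """Convert a per-core CPU percentage (one core = 100%) to a system-wide one."""
    if cores <= 0:
        raise ValueError("cores must be positive")
    return single_core_percent / cores


# CPU [%] values from the single-run table, eight logical cores
for label, cpu in [("VLC 3.0.3 (DEB)", 49), ("VLC 3.0.4 (Snap)", 16)]:
    print(f"{label}: {system_wide_cpu(cpu, 8):.2f}% of total CPU capacity")
```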

Another important point to mention is that during the runtime, the snap version of VLC printed out a message onto the standard output about the use of hardware acceleration. There was no such message for the repo-provided VLC 3.0.3. As this would explain the CPU differences, we tested the snap package without hardware acceleration (through the application options), and the CPU values were in the order of about 50%, identical to the DEB package.

libva info: VA-API version 0.39.0

libva info: va_getDriverName() returns 0

libva info: Trying to open /snap/vlc/555/usr/lib/x86_64-linux-gnu/dri/i965_drv_video.so

libva info: Found init function __vaDriverInit_0_39

libva info: va_openDriver() returns 0

[00007f4980c05a00] avcodec decoder: Using Intel i965 driver for Intel(R) Kabylake - 1.7.0 for hardware decoding

We were unable to use the hardware acceleration in the VLC 3.0.3 DEB package. Different manual settings did not change the application behavior. Using perf to profile the application behavior, we note that the i965 libraries (i965_dri.so) accounted for less than 0.1% of the overall CPU time. Indeed, the use of the graphics driver for rendering seems like the most probable explanation for the user CPU difference between the two versions. The use of the hardware acceleration requires further investigation.

“Cold” run

We also analyzed the performance of the VLC application with cached data cleared. This can be achieved by writing 1, 2 or 3 to /proc/sys/vm/drop_caches as root, for example with the echo 3 > /proc/sys/vm/drop_caches command (1 drops the page cache, 2 drops dentries and inodes, 3 drops both). The table below shows the results, with the subsequent “hot” run numbers in parentheses.

| Total runtime (seconds) | VLC (DEB) | VLC (Snap) |

| 640×480 (13 seconds clip) | 14.1 (13.5) | 17.5 (13.7) |

| 1920×1080 (49 seconds clip) | 50.6 (50.0) | 54.0 (50.2) |

For the DEB package, the disk read introduces a consistent 0.6 second delay. With the snap, the delay was also consistent, roughly 3.8 seconds. This is regardless of the file size, which indicates it depends on how snap launches and accesses the disk resource and not on the performance of the underlying storage medium.

This value exceeds the Doherty Threshold and may be noticeable to users when they run the application (after a relative period of inactivity, where any cached data is cleared).
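For scripted “cold” runs, the cache-dropping step described above could be wrapped in a small helper. The following is a minimal Python sketch, not the tooling used for these tests; the function name and the path parameter (which allows a dry run against an ordinary file) are assumptions for this example. Writing to the real /proc file requires root.

```python
import os

DROP_CACHES_PATH = "/proc/sys/vm/drop_caches"


def drop_caches(mode=3, path=DROP_CACHES_PATH):
    """Flush dirty pages, then ask the kernel to drop clean caches.

    mode 1 drops the page cache, 2 drops dentries and inodes, 3 drops both.
    """
    if mode not in (1, 2, 3):
        raise ValueError("mode must be 1, 2 or 3")
    # Dirty pages cannot be dropped, so write them to disk first.
    os.sync()
    with open(path, "w") as handle:
        handle.write(f"{mode}\n")


# Example usage (as root), before each "cold" measurement:
# drop_caches(3)
```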

Platform: Fedora Workstation 28 (Gnome desktop)

We repeated the test above running on Fedora 28 with the Gnome desktop environment. In Fedora, both the repo and the snap version of the VLC media player are consistent (3.0.4). The full breakdown of the system and its components is as follows:

| Component | Version |

| Processor | 4 x Intel(R) Core(TM) i3-4005U @ 1.7 GHz |

| System | Fedora 28 (64-bit) |

| Kernel | 4.17.19-200.fc28.x86_64 |

| Display | X.Org 1.19.6 |

| Screen resolution | 1366×768 px |

| Gnome version | 3.28.2 |

| Gnome Shell version | 3.28.3 |

| GTK version | 3.22.30-1 |

| Snap | 2.35.-1.fc28 |

| Base | 16 |

Results

The test runtime figures are largely consistent with the results obtained on platform 1 (Ubuntu). Due to hardware differences, the total test duration was longer, as hardware platform 2 features an older processor and non-SSD storage. The snap version incurred a penalty of about 1.6 to 1.9 seconds compared to the RPM package, which is in line with the earlier figures. This translates into about 200 ms delay per iteration, well below the Doherty Threshold.

| Total runtime (seconds) | VLC (RPM) | VLC (Snap) |

| 640×480 (13 seconds clip) | 159.2 | 160.8 |

| 1920×1080 (49 seconds clip) | 526.9 | 528.8 |

In Fedora, neither version used hardware acceleration. Consequently, CPU usage was about 42% for both versions, with software rendering only. This also confirms the results obtained on the other hardware platform, under Ubuntu. It also highlights that the differences between repo-provided and snap packages are mostly due to specific architectural changes in the software itself. Max RSS usage and the number of context switches were almost identical, and we can also observe the 200 ms per-run delta between the two versions.

| Software version | Runtime [s] | CPU [%] | Max RSS [MB] |

| VLC 3.0.4 (RPM) | 13.8 | 42 | 139 |

| VLC 3.0.4 (Snap) | 14.0 | 42 | 133 |

If we look at the dstat results collected, we can again see similar results. Both versions had near-identical CPU utilization figures. The results also agree with the previous findings obtained in the test on the Ubuntu platform.

| Version | User CPU [%] | Sys CPU [%] |

| VLC (RPM) (1920×1080) | 59.5 | 2.3 |

| VLC (Snap) (1920×1080) | 60.0 | 2.4 |

“Cold” run

The results obtained here are consistent with our previous findings, and they also align with what we observed on the other hardware platform when testing VLC. We can see that the cold run requires an extra 2.2 seconds for the snap version to run. Because of the slower storage medium, the cold-run overhead compared to the hot run was about 7.4 seconds for the RPM package and about 9.5 seconds for the snap package. On slower systems, the time penalty will be less noticeable, but it is still above the accepted 400 ms threshold. The results are consistent with the HD clip run, too.

| Total runtime (seconds) | VLC (RPM) | VLC (Snap) |

| 640×480 (13 seconds clip) | 21.4 (14.0) | 23.6 (14.1) |

Test 2: GIMP image processing software

We extended the testing to include GIMP, a free, cross-platform raster graphics editor. GIMP can also be used in a batch mode, allowing tasks to be executed from the command line. Initially, we performed a ‘sharpen mask’ manipulation on a 4096x2304px 2.2MB JPEG file and measured the difference in overall execution times.

With GIMP, we also used the perf performance analysis tool to examine the application behavior in detail, as well as any differences between the repo-provided program and the snap version. A similar test with VLC provided somewhat limited results as VLC is not designed to run as root (perf requires access to performance counters and tracepoints).

At the time of this testing, the following application versions were available: GIMP 2.8 as a DEB/RPM package from the repositories, and GIMP 2.10 as the snap package. This is a major version difference, and we will discuss the implications thereof below. In this section, we will only refer to the DEB package, as the results obtained both on the Ubuntu and Fedora platforms point to the exact same behavior (some small differences in actual figures due to processor and disk speed).

Results

Over 10 image manipulation iterations, we can see that the snap version of GIMP incurs a penalty of about 4.5 seconds, or roughly 450 ms per run. Even though there is a variation of up to ±150 ms in single run results, the difference between the two versions remains consistent. No UI is presented in the batch mode.

| Total runtime (seconds) | GIMP 2.8 (DEB) | GIMP 2.10 (Snap) |

| Batch processing 4K JPEG | 59.9 | 64.4 |

Single run comparison

To understand this better, we also need to look at a single run in more detail. We can see there is a significant difference between the two versions of GIMP. Earlier, we saw only small differences between the two versions of VLC. Indeed, GIMP 2.10 comes with major improvements, including multi-threading and GPU-side processing, which explain the difference in performance metrics.

| Software version | Runtime [s] | CPU [%] | Max RSS [MB] | Minor page faults | Context switches |

| GIMP 2.8 (DEB) | 4.0 | 109 | 134 | 76,000 | 56,000 |

| GIMP 2.10 (Snap) | 4.4 | 208 | 612 | 225,000 | 106,000 |

Single run comparison (large file and multi-threading)

Multi-threading offers performance improvements for longer-running CPU-bound tasks. For short-running tasks, the benefits may not be obvious, and may even result in a slight performance penalty, as we’ve seen above. To ascertain this, we also ran a second test, with an image manipulation task against a 16384x9216px 17MB JPEG file. Here we can see the snap version performing better than the repo-provided DEB package:

| Total runtime (seconds) | GIMP 2.8 (DEB) | GIMP 2.10 (Snap) |

| Batch processing 64K JPEG | 42.7 | 41.8 |

While earlier, a typical short run with GIMP in the batch mode resulted in about 10% difference in favor of the DEB package, with the longer, more CPU-intensive task, the snap package offered a slightly better total runtime figure (about 2% total, 13.5% relative). This is an indication that any snap-induced differences are largely minimal, and the major parameter is the underlying architectural difference in the software. Moreover, the snap-provided package can be optimized further, as it currently ships without BABL fast paths, which can accelerate GIMP GEGL:

Missing fast-path babl conversion detected, Implementing missing babl fast paths accelerates GEGL, GIMP and other software using babl, warnings are printed on first occurance of formats used where a conversion has to be synthesized programmatically by babl based on format description

Single run comparison (disk vs cached runs)

We also performed a similar test here, where we dropped the caches before running the software, followed by a second run that would read data from memory. With the DEB package, the delta is similar to what we observed with VLC, about 0.5 seconds. With the snap package, the “cold” run was about 2.8 seconds longer than a “hot” run, consistent with our previous findings. Moreover, since no UI was rendered in these batch runs, the time penalty can be ascribed to the snap functionality, and not to any differences between the two versions of GIMP.

| Total runtime (seconds) | GIMP 2.8 (DEB) | GIMP 2.10 (Snap) |

| Batch processing 64K JPEG | 42.8 (42.3) | 44.0 (41.2) |

Perf results

Looking under the hood, we can confirm that there are no significant differences when running the snap package compared to the DEB one. If we look at the top five entries with the highest CPU usage during a typical run, they are very similar in both cases. Again, the differences stem from the jump in the software version and not from the use of the snap wrapper.

The DEB package top five hitters:

| Overhead | Command | Shared Object | Symbol |

| 2.80% | gimp | libglib-2.0.so.0.5600.1 | g_hash_table_lookup |

| 1.61% | file-jpeg | libm-2.27.so | __ieee754_pow_fma |

| 1.54% | gimp | libgobject-2.0.so.0.5600.1 | g_type_is_a |

| 1.37% | gimp | libgobject-2.0.so.0.5600.1 | 0x00000000000205f6 |

| 1.29% | gimp | libm-2.27.so | __ieee754_pow_fma |

The snap package top five hitters:

| Overhead | Command | Shared Object | Symbol |

| 3.05% | gimp | libglib-2.0.so.0.5600.2 | g_hash_table_lookup |

| 2.29% | gimp | gimp-2.8 | 0x000000000017a034 |

| 1.47% | gimp | ld-2.27.so | do_lookup_x |

| 1.36% | gimp | libm-2.27.so | __ieee754_pow_fma |

| 1.10% | gimp | libglib-2.0.so.0.5600.2 | g_mutex_unlock |

Conclusion

Differences between repo-provided packages and snaps stem mostly from underlying architectural factors (hardware, software versions, enabled features) and not from any major differences in the packaging format.

From this benchmark, we can conclude that, apart from the initial application startup time, which (currently) favors the conventional software provided through the distribution repositories over the snap packages, there is no significant difference between the two formats when running on either Ubuntu or Fedora.

Snaps do incur a consistent penalty of about 200 ms per “hot” application launch, which will most likely not be noticeable by most users. “Cold” starts incur a penalty of about 3 seconds over the conventional applications, and this will be noticeable to a majority of users. We are actively working on profiling and improving these results.

During the normal runtime of VLC, the behavior and system resource utilization are largely identical, save for the use of the hardware acceleration, which was only available for the Ubuntu VLC snap package. The ability to use the latest software not currently available in conventional distribution channels, like the software repositories, can also be rather advantageous to users, as in the case of GIMP 2.10, for instance.

Moreover, we believe that software developers should have an easy, flexible framework to allow them to test, profile and benchmark their products, as well as troubleshoot any issues that may arise during the build and publication of snaps. To that end, we will also discuss various tips and tricks for how to simplify and improve the experience with snapcraft in a future article.

Your comments and feedback are appreciated. If this is something that interests you, or you have additional pointers and suggestions, I encourage you to join the discussion forum.

P.S. No Top Gun memes were harmed in the writing of this article.

Photo by Marc-Olivier Jodoin on Unsplash.
